Machine Play

Preamble

The only really cool software things to come along in the past several years have been the rise of the machines, in the form of Machine Learning, and serverless/microservice/Kubernetes clouds. The former is a complete game changer for humans; the latter is just a really cool evolution of a concept that's been lingering since the dawn of computing.

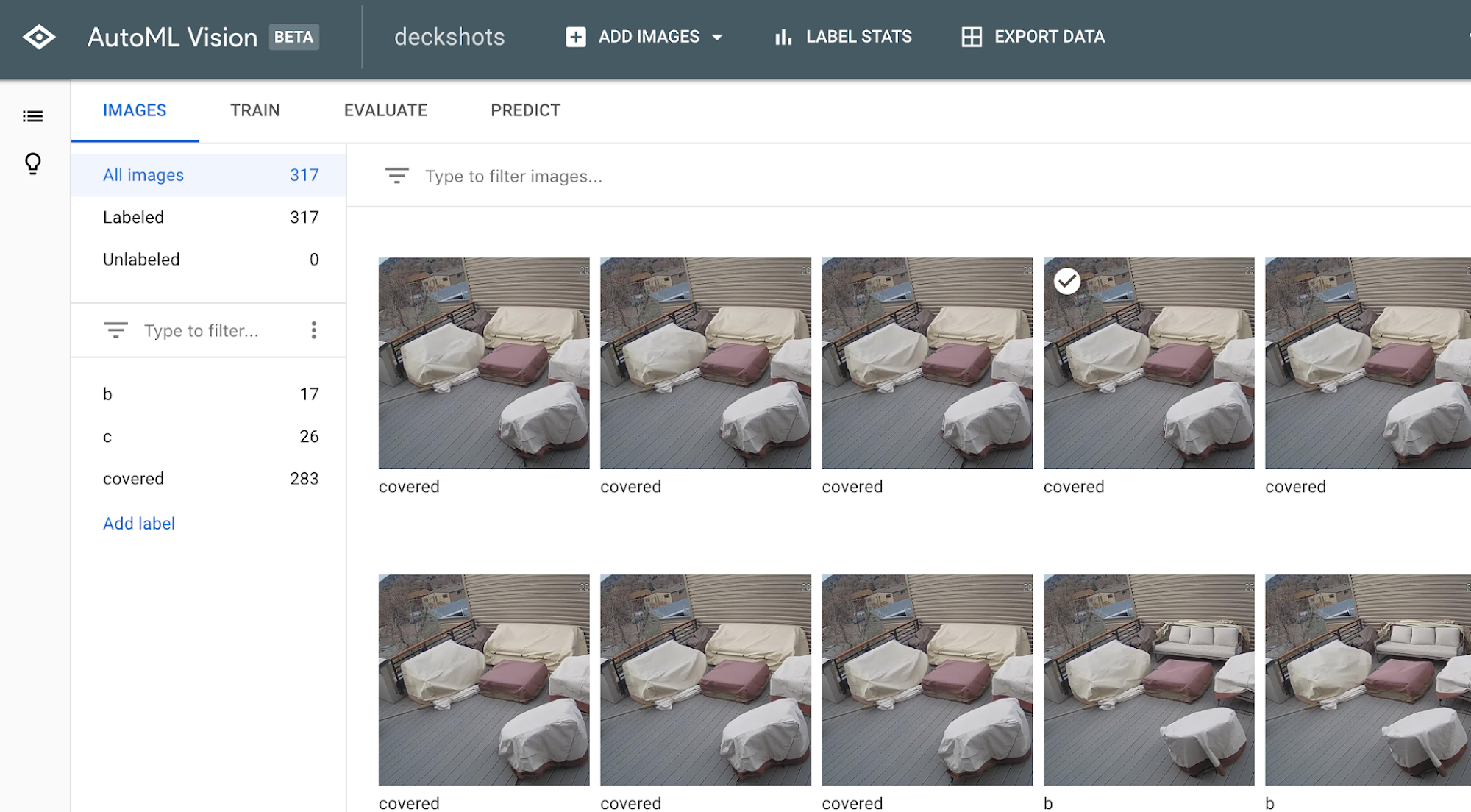

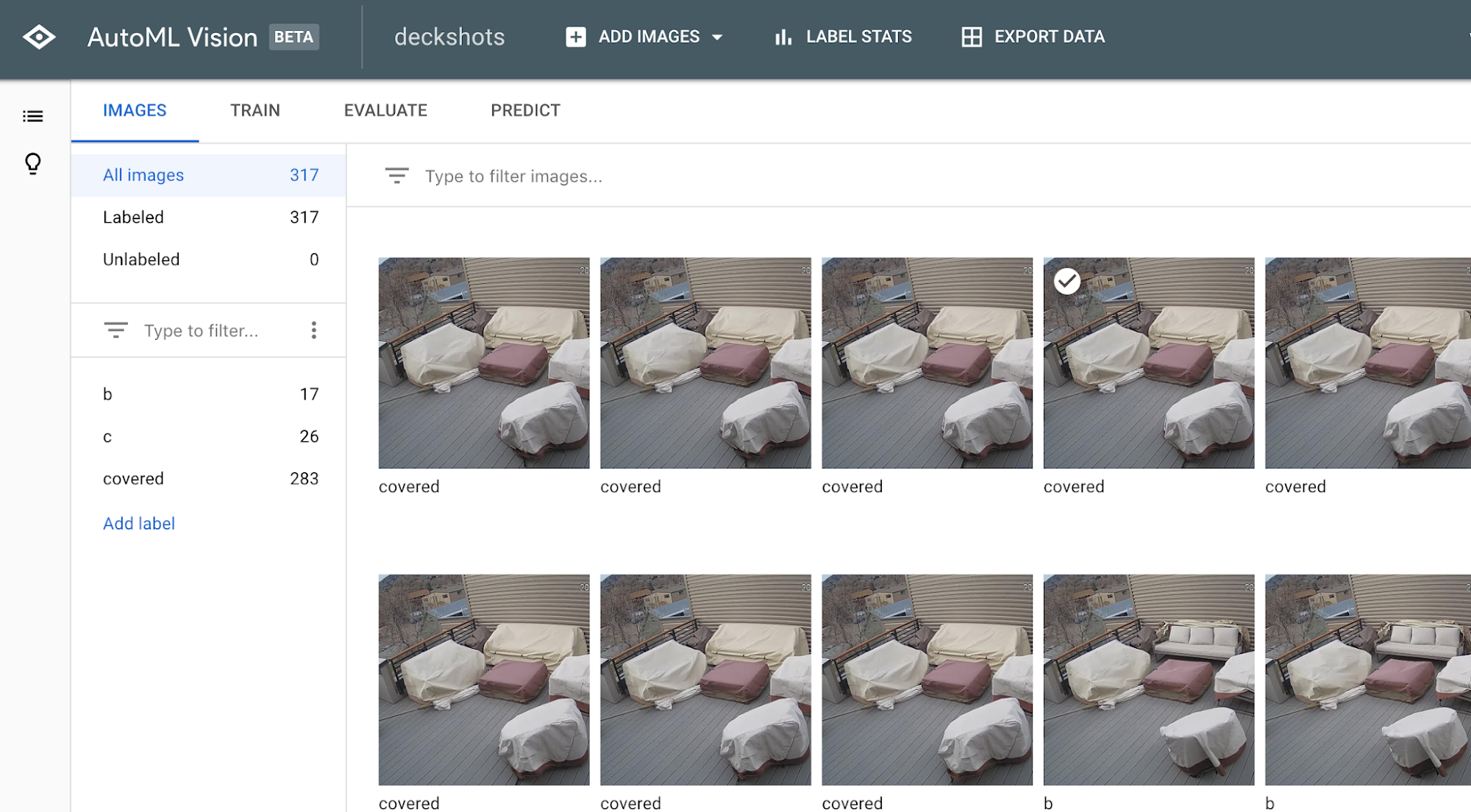

Amazon's foray into the computer vision market, a while ago, was DeepLens. I thought it was odd that they combined both the software and hardware (camera; note that the camera is only suitable for indoor use) ends of the product. Why in the heck would you include a camera with a product like this!?! Several months later, the Goog released access to its genericized ML backend: Google Cloud Vision, on the back of AutoML, was cast unto thee.

Well, after using Google's Cloud Vision and a third-party outdoor webcam to cobble together a system that SMSes me when one of the deck furniture covers gets blown off in the wind (project breakdown below), I now see exactly why Amazon bundled the two. I only wish they'd offer an outdoor-grade camera version. It turns out, unsurprisingly, that the pics you feed the backend are such an integral part of the training/prediction process that tightly coupling the two is very important (well done, Amazon product managers who realized this early). I believe Amazon also does on-board model prediction, which sounds cool, but I don't see the necessity of this feature. I think the Goog got it right by just offloading the evaluation bit to the network (its modus operandi, obviously) via URL image data retrieval. Sure, there are applications for on-board execution, but they seem more specialized than most use cases will require.
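To make "offloading the evaluation bit to the network" a little more concrete, here is a minimal sketch of the request side: image bytes fetched from a URL get base64-encoded into a JSON body for a remote prediction call. The body shape (`payload.image.imageBytes`) is my understanding of the AutoML Vision REST `predict` request and should be treated as an assumption; `fetch_image` and `build_predict_body` are hypothetical helpers, not the author's code.

```python
import base64
import json
import urllib.request


def fetch_image(url: str, timeout: float = 10.0) -> bytes:
    """Download raw image bytes from an HTTP URL (e.g. a 'latest image' endpoint)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def build_predict_body(image_bytes: bytes) -> str:
    """Wrap image bytes in a JSON body for a remote prediction call.

    ASSUMPTION: the {"payload": {"image": {"imageBytes": ...}}} shape mirrors
    the AutoML Vision REST predict request; verify against current docs.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return json.dumps({"payload": {"image": {"imageBytes": encoded}}})
```

The device never runs the model; it only has to produce a JPEG that something on the network can fetch, e.g. `build_predict_body(fetch_image("http://pi.local/latest.jpg"))` with a hypothetical URL. The body would then be POSTed to the model's predict endpoint with whatever auth the service requires.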

The Project

I cover the outdoor furniture on my rooftop deck to protect it from the elements (Colorado is pretty tough on the weather front). It's often really windy up there, and the covers regularly get blown off. The problem is that I don't get topside as often as I'd like, so the furniture could be left uncovered for a while, exposed to the damaging sun. So I wanted a notification when a cover blew off so I could go re-cover the furniture. Traditional image parsing/recognition solutions would obviously be horribly unreliable and hard to use for this, so enter ML. I wanted an outdoor webcam to take pics of the deck, have Google Cloud Vision determine with high accuracy (90%+) whether or not a cover had been blown off, and text me if so.
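The "text me if so" rule boils down to a threshold check on the classifier's scores. A minimal sketch, assuming the blown-off-cover label is named `uncovered` (hypothetical; the label names aren't given here) and assuming an AutoML-style response of `{"payload": [{"displayName": ..., "classification": {"score": ...}}]}`:

```python
from typing import Any, Dict, Mapping

UNCOVERED_LABEL = "uncovered"   # hypothetical label name
ALERT_THRESHOLD = 0.90          # the "90%+" bar from the project goal


def scores_from_response(response: Mapping[str, Any]) -> Dict[str, float]:
    """Flatten a prediction response into {label: score}.

    ASSUMPTION: the response looks like AutoML Vision's predict output,
    {"payload": [{"displayName": ..., "classification": {"score": ...}}]}.
    """
    return {item["displayName"]: float(item["classification"]["score"])
            for item in response.get("payload", [])}


def should_alert(scores: Mapping[str, float],
                 label: str = UNCOVERED_LABEL,
                 threshold: float = ALERT_THRESHOLD) -> bool:
    """True when the model is at least `threshold` confident a cover is off."""
    # A label the model didn't return counts as a score of zero.
    return scores.get(label, 0.0) >= threshold
```

For example, `should_alert({"covered": 0.07, "uncovered": 0.93})` is `True`, while `{"covered": 0.4, "uncovered": 0.6}` stays quiet. A high bar trades a few missed alerts for far fewer false alarms on the phone.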

The Pieces

- Input

  - Amcrest outdoor IP camera - mounted outside and aimed at the deck furniture. The camera hardware for this is great, but the firmware/on-board HTTP server/app is awful and stuck in the late 1990s. If anyone has experience with a better outdoor IP webcam (no, Nest doesn't natively work), please let me know.

- Processing Nodes

  - (LAN) - Raspberry Pi server (NOOBS OS) - image repository for the camera (filesystem) and server (HTTP) to serve up the latest image.

  - (WAN) - DigitalOcean droplet (Ubuntu) - hosts the app.

- Software

  - My app/driver - Python script (code is here) that cron runs every ten minutes on the droplet.

  - FTP server - vsftpd. The webcam's firmware design is as old as dirt and only talks FTP for snapshot images.

  - Bash script - run by cron every ten minutes to copy/rename the latest image capture onto the HTTP server so the main app can access it.

  - Google Cloud Vision - used to predict whether or not an image of my deck furniture has any of the covers blown off.

- Output

  - Twilio API - used to send me an SMS message when a cover has been blown off.
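Stripped of the network plumbing, the cron-driven app in this list is a fetch, predict, notify loop. A minimal sketch of one cron tick, with each stage passed in as a function so it can be swapped or faked in tests; the `fetch`, `predict`, and `notify` callables and their signatures are hypothetical, not the author's actual code:

```python
from typing import Callable, Mapping

Fetch = Callable[[], bytes]                      # e.g. GET the Pi's latest image
Predict = Callable[[bytes], Mapping[str, float]] # e.g. call the hosted model
Notify = Callable[[str], None]                   # e.g. send an SMS


def run_once(fetch: Fetch, predict: Predict, notify: Notify,
             label: str = "uncovered", threshold: float = 0.90) -> bool:
    """One cron tick: grab the latest snapshot, classify it, text if needed.

    Returns True if a notification was sent.
    """
    image = fetch()
    scores = predict(image)
    score = scores.get(label, 0.0)
    if score >= threshold:
        notify(f"Deck cover blown off ({score:.0%} confident)")
        return True
    return False
```

Because every stage is injected, the same function runs under cron with real HTTP/Twilio callables or in a test with lambdas, e.g. `run_once(lambda: b"jpeg", lambda img: {"uncovered": 0.95}, print)`.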

Learnings

The process of building a model on someone else's engine is unbelievably simple. If you can imagine it... the computer can model it and predict it.

Labeling image data is a major pain and very time consuming. While model prediction/execution is super fast after you've trained it, the labeling process required to train is horribly cumbersome. Looks like someone has entered the market to start doing the hard work for us; I'll give CloudFactory a try next time I need to build a model (which is pretty soon, actually, given that my cover configuration has already changed).

We are going to accelerate from zero to sixty very quickly with ML-backed image apps. I imagine app providers offering integration solutions with existing webcam setups that let consumers easily train a model for whatever visual scenario they want to be notified about (cat is out of food, plant is lacking water, garage door is open, bike is unlocked, on and on and on). Of course, you can apply all of this logic to audio as well. The future is going to be cool!
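Part of the labeling grind is just getting labeled images into a form the training service accepts. One way to cut that down is to sort snapshots into one folder per label and generate the import list from the folder layout. A minimal sketch, assuming a two-column `image_uri,label` CSV (my understanding of the AutoML Vision import format; check the current docs), a hypothetical `gs://my-bucket/deck` prefix, and a local `<root>/<label>/*.jpg` layout:

```python
import csv
import io
from pathlib import Path


def build_label_csv(root: Path, uri_prefix: str) -> str:
    """Turn root/<label>/<image>.jpg into '<uri_prefix>/<label>/<image>.jpg,<label>' rows.

    ASSUMPTIONS: one subfolder per label, images already uploaded under
    uri_prefix with the same relative paths, and a two-column CSV import format.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for label_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for image in sorted(label_dir.glob("*.jpg")):
            uri = f"{uri_prefix.rstrip('/')}/{label_dir.name}/{image.name}"
            writer.writerow([uri, label_dir.name])
    return out.getvalue()
```

Dragging files into `covered/` and `uncovered/` folders is still manual, but it's a lot less tedious than clicking through a labeling UI one image at a time.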

What Could Be Better

- I should collapse the file/FTP server and the app server onto either the WAN-based Droplet or the LAN-based Pi server.
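If both roles lived on one host, the bash copy/rename step and the HTTP hop could go away: the app could read the newest snapshot straight out of the FTP upload directory. A minimal sketch of that lookup, assuming snapshots land as `.jpg` files somewhere under one upload directory (the path is hypothetical) and that a file modified in the last few seconds may still be mid-upload:

```python
import time
from pathlib import Path
from typing import Optional


def newest_snapshot(upload_dir: Path, min_age_s: float = 5.0,
                    now: Optional[float] = None) -> Optional[Path]:
    """Return the most recently modified .jpg under upload_dir, or None.

    Files touched within the last `min_age_s` seconds are skipped, on the
    assumption that the camera may still be writing them over FTP.
    """
    now = time.time() if now is None else now
    candidates = [p for p in upload_dir.rglob("*.jpg")
                  if now - p.stat().st_mtime >= min_age_s]
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)
```

Using `rglob` rather than `glob` is a hedge in case the camera writes into dated subfolders; if it writes to a flat directory, either works.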

- The webcam. While the hardware is great, the software on the camera only supports SMB/FTP for snapshot storage. If the camera supported snapshots via HTTP, I could forgo this interim image-staging framework entirely. There might be joy in this forum post; I'll need to dig in and see: https://amcrest.com/forum/technical-discussion-f3/url-cgi-http-commands--t248.html
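If that forum thread pans out, grabbing a frame could be a single authenticated HTTP GET. A hedged sketch: the `/cgi-bin/snapshot.cgi?channel=1` path is the endpoint commonly exposed by Dahua-derived camera firmware, which many Amcrest models are said to run, but I haven't confirmed it for this camera; the host, credentials, and the use of HTTP digest auth are all assumptions.

```python
import urllib.parse
import urllib.request


def snapshot_url(host: str, channel: int = 1) -> str:
    """Build the (assumed) snapshot CGI URL for a camera at `host`."""
    query = urllib.parse.urlencode({"channel": channel})
    return f"http://{host}/cgi-bin/snapshot.cgi?{query}"


def fetch_snapshot(host: str, user: str, password: str,
                   channel: int = 1, timeout: float = 10.0) -> bytes:
    """GET one JPEG frame, assuming the camera uses HTTP digest auth."""
    url = snapshot_url(host, channel)
    passwords = urllib.request.HTTPPasswordMgrWithDefaultRealm()
    passwords.add_password(None, url, user, password)
    opener = urllib.request.build_opener(
        urllib.request.HTTPDigestAuthHandler(passwords))
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()
```

If this works against the camera, the FTP server, the copy script, and the Pi's HTTP server all drop out of the pipeline.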

- I need to format the SMS message to be Apple Watch form-factor friendly.
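Formatting for the watch mostly means putting the one fact that matters first and keeping the message short enough to read at a glance. A minimal sketch; the 60-character budget is an arbitrary assumption, not an Apple or Twilio limit, and `watch_friendly_sms` is a hypothetical helper:

```python
def watch_friendly_sms(score: float, when: str, max_len: int = 60) -> str:
    """Compose a short alert with the actionable part first.

    `when` is a preformatted time string; `max_len` is an arbitrary budget
    chosen for glanceability.
    """
    text = f"Cover off! {score:.0%} sure @ {when}"
    if len(text) <= max_len:
        return text
    # Trim with a plain-ASCII "..." so the message stays in the GSM-7 alphabet.
    return text[: max_len - 3] + "..."
```

The result would go in the `body` argument of the Twilio helper library's `client.messages.create(...)`. Sticking to GSM-7 characters keeps a short alert in a single 160-character SMS segment.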

- I need to reap/cull images after some duration.
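Reaping could be a small cron job that deletes snapshots older than a retention window. A minimal sketch, assuming the images are `.jpg` files under one directory tree and a hypothetical seven-day retention; the `now` parameter only exists to make it testable:

```python
import time
from pathlib import Path
from typing import List, Optional


def reap_snapshots(root: Path, max_age_days: float = 7.0,
                   now: Optional[float] = None,
                   dry_run: bool = False) -> List[Path]:
    """Delete .jpg files under root whose mtime is older than max_age_days.

    Returns the paths that were (or, with dry_run=True, would be) removed.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    doomed = sorted(p for p in root.rglob("*.jpg") if p.stat().st_mtime < cutoff)
    if not dry_run:
        for p in doomed:
            p.unlink()
    return doomed
```

Running it once with `dry_run=True` is a cheap way to check the retention window before it starts deleting anything.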

- As far as I can tell, Google Cloud Vision models can't be augmented *after* they've been trained. I'd like to add revised image data without having to rebuild/retrain the entire model. This seems like a pretty big bug. All of the image ML prediction scenarios I can think of will trend toward wanting to augment/add new image data over time without having to maintain the original seed model data.